Understanding how infants learn not only sheds light on the mechanisms of intellectual development but also inspires the design of training algorithms for machine learning. A study published in Science in February 2024 [1] examined how children associate words with the objects they see by analyzing 61 hours of video recorded by a head-mounted camera worn by a single child. The researchers built a contrastive learning model to describe this learning process. Similarly, a NeurIPS 2023 paper [2] found that infants can spontaneously develop abstract representations from visual input through self-supervised learning. The next question is how infants achieve this step by step. That is the question addressed by the new research introduced in this article.

▷ Anderson, Erin M., et al. "An edge-simplicity bias in the visual input to young infants." Science Advances 10.19 (2024): eadj8571.

A paper published on May 10, 2024, in Science Advances [3] used a similar experimental design, mounting cameras on infants' heads to record and analyze the visual stimuli in their everyday environments. By comparing the images seen by infants with those seen by adults, the study found that infants receive a distinctive visual input dominated by simple patterns made up of a few high-contrast edges. Such high-contrast patterns are thought to be crucial for the development of the brain's visual areas.

▷ Figure 1: Experimental design. In early visual development, infants tend to view patterns composed of a few high-contrast edges, as in panel b. Source: Reference 3.

The traditional assumption in cognitive science is that visual input is fundamentally the same for everyone, regardless of developmental stage. However, the new research, based on data recorded by head-mounted cameras on 10 infants aged 3-13 weeks (5 males) and a control group of 10 adults aged 31-70 years, found that visual input varies with development. The visual experiences of very young infants are specific to their age. They prefer simple, high-contrast scenes (Figure 2), such as broad black stripes and checkerboard patterns.

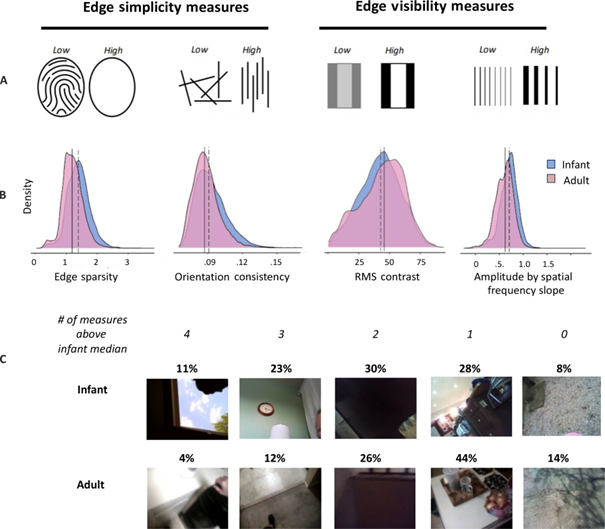

▷ Figure 2: Infants prefer visual input with high contrast and simple edges. Source: Reference 3.

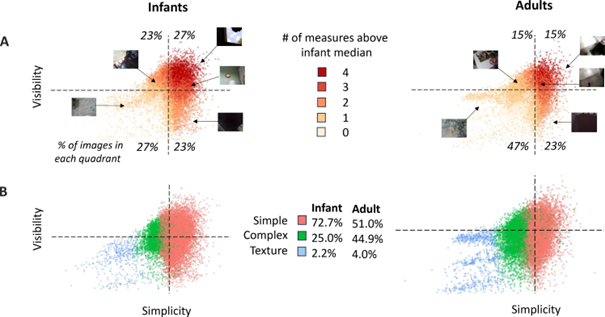

Researchers divided the images into four quadrants based on simplicity and contrast. They found that infants preferred patterns with simple boundaries and high contrast (Figure 3). This provides insight into what attracts the attention of infants.

▷ Figure 3: Proportion of observation of images with different feature combinations by infants and adults. Source: Reference 3.
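The four-quadrant split described above can be illustrated with a minimal sketch. It assumes grayscale images with intensities in [0, 1], uses RMS contrast (the standard deviation of pixel intensities) as the contrast measure, and uses the fraction of strong-gradient pixels as a stand-in for "simplicity". These measures, the function names, and the cut-off values are illustrative assumptions, not the metrics or thresholds used in Reference 3.

```python
import numpy as np

def rms_contrast(img: np.ndarray) -> float:
    """RMS contrast: standard deviation of pixel intensities in [0, 1]."""
    return float(np.std(img))

def edge_density(img: np.ndarray, grad_thresh: float = 0.1) -> float:
    """Fraction of pixels whose gradient magnitude exceeds grad_thresh."""
    gy, gx = np.gradient(img)
    return float(np.mean(np.hypot(gx, gy) > grad_thresh))

def quadrant(img: np.ndarray, contrast_cut: float = 0.2,
             density_cut: float = 0.1) -> str:
    """Label an image by simplicity and contrast (cut-offs are hypothetical)."""
    simplicity = "simple" if edge_density(img) <= density_cut else "complex"
    contrast = "high-contrast" if rms_contrast(img) >= contrast_cut else "low-contrast"
    return f"{simplicity}/{contrast}"

# Synthetic test images, not real head-camera frames.
stripe = np.zeros((64, 64))
stripe[:, 32:] = 1.0                      # one broad black/white edge
faint = np.full((64, 64), 0.45)
faint[:, 32:] = 0.55                      # same layout, very low contrast
noise = np.random.default_rng(0).random((64, 64))  # many edges everywhere

for name, img in [("stripe", stripe), ("faint", faint), ("noise", noise)]:
    print(name, quadrant(img))
```

Counting how many frames from each group fall into each label would give a proportion table of the kind shown in Figure 3, although the real analysis relies on its own image statistics.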

Given that the V1 area of the visual cortex extracts local edges, which are then used to build up meaningful objects and scenes, could infants' visual preferences guide the training of visual models? A NeurIPS 2023 paper [4] found that training on images similar to those infants see during early development, rather than on the kind of images adults see, improved the performance of AI systems on visual recognition tasks. That study used images captured by cameras worn by infants, although the researchers were not yet aware of infants' visual preferences. The new research suggests that a preference for simplicity and high contrast may help train the V1 area during early visual development. Convolutional neural networks, whose architecture was inspired by the visual cortex, show a similar property: their first layers typically learn edge-like filters.
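To make the idea of local edge extraction concrete, here is a minimal sketch of an oriented edge filter applied to a high-contrast stripe. It uses fixed Sobel kernels as a rough stand-in for orientation-selective V1 cells or a first CNN layer. The helper `filter2d` is a hypothetical name introduced for illustration, and the kernels are hand-picked, not learned and not taken from any of the cited studies.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

# Sobel kernels: SOBEL_X responds to vertical edges, SOBEL_Y to horizontal ones.
SOBEL_X = np.array([[-1, 0, 1],
                    [-2, 0, 2],
                    [-1, 0, 1]], dtype=float)
SOBEL_Y = SOBEL_X.T

def filter2d(img: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """Valid-mode cross-correlation, the operation a CNN conv layer computes."""
    windows = sliding_window_view(img, kernel.shape)  # shape (H-2, W-2, 3, 3)
    return np.einsum("ijkl,kl->ij", windows, kernel)

# A single vertical black/white edge, like a broad stripe pattern.
img = np.zeros((8, 8))
img[:, 4:] = 1.0

vertical_response = filter2d(img, SOBEL_X)
horizontal_response = filter2d(img, SOBEL_Y)

print("max |response| to vertical-edge filter:  ", np.abs(vertical_response).max())
print("max |response| to horizontal-edge filter:", np.abs(horizontal_response).max())
```

The vertical-edge filter responds strongly along the stripe boundary while the horizontal-edge filter stays silent. An image with a few strong edges therefore gives a sparse, unambiguous signal to each orientation channel.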

The infants in the study were children of faculty members at Indiana University Bloomington and were growing up in a built, largely man-made environment. This raises the question: could the study's conclusions be limited to infants in such environments and lack cross-cultural universality? A 2023 study [5] answers this objection with head-mounted camera data from infants in a small, crowded fishing village in Chennai, India, where electricity is limited and most daily activities take place outdoors. The results showed no statistically significant difference between what infants in Chennai and infants in the West observed, with children from both regions favoring simple, high-contrast patterns.

This research suggests that infants' visual observation patterns could be used to identify and intervene early in the development of diseases such as cataracts, strabismus, refractive errors, and ptosis. These conditions disrupt visual development by interfering with input to the visual cortex, leading to abnormalities. In the future, infants could wear cameras, and algorithms could detect if they do not show a preference for simple and high-contrast images, allowing for low-cost identification of related diseases.

While mammals such as horses and sheep can run shortly after birth, human infants need about three months before they can see and hear well, and roughly another six months to gain even limited control of their head and posture. Why does the human nervous system take so long to mature? The study offers a possible explanation: the visual system first trains the V1 area for edge recognition and only then trains the V2-V6 areas for more abstract representations. This slow, step-by-step optimization helps build a more intelligent and flexible visual system.

Following this reasoning, young primates such as gorillas could be fitted with cameras during their critical period of visual development to see whether they show visual preferences similar to those of human infants. Although no such study appears to have been done, a 2020 study [6] of various primates, including the mouse lemur, the smallest primate, found that the arrangement of visual processing units in the brain follows the same mathematical rules across primates of different sizes.

Therefore, it is reasonable to infer that similar patterns might be observed in primates like gorillas. However, for animals like horses and sheep that can move immediately after birth, it may not be possible to observe a preference for simple and high-contrast patterns in their young. For animals like cats and dogs, which also require a development period (from opening their eyes to normal walking and hunting) but are not primates, it is difficult to predict.

Cross-species comparative studies could reveal how the visual systems of different organisms trade off the higher intelligence that slow development makes possible against the evolutionary advantages of innate abilities. This trade-off is also a core topic for machine intelligence, and it extends beyond the visual system to the broader debate over innate versus acquired traits.