"Zhuang Zhou dreams of being a butterfly, and the butterfly becomes Zhuang Zhou." Throughout the history of both Eastern and Western cultures, humans have shared a common fascination with dreams. Dreams are also a significant part of mysticism, often associated with concepts such as predicting the future and self-discovery. To this day, humans have persistently tried to uncover the meaning behind dreams, frequently resorting to various interpretation methods, leading to the development of numerous schools of thought.

Indeed, dreams offer a glimpse into the depths of our subconscious, revealing hidden desires, fears, and emotions, which can also impact our waking lives. With the rise of technologies such as large language models, AI assistants claiming to interpret dreams through conversation have emerged on platforms like GPT Store.

▷ Figure 1. A dream interpretation website based on large language models: https://dreamybot.com

Unlike folk methods of dream interpretation, neuroscience research on dreams first focuses on the content of dreams, such as the images seen and sounds heard within the dream, before considering their meaning. The research subjects are not individual anecdotes but aggregated narratives from groups of people and the statistical patterns within them. With advancements in technology, research methods now also include functional magnetic resonance imaging (fMRI) and brainwave recording techniques such as electroencephalography (EEG), which interpret dreams based on objective records rather than subjective descriptions. This article surveys the field from the current state of scientific research to its commercial prospects, exploring how AI can be used as a tool to help people better understand their dreams.

1. Are There Differences Between Boys' and Girls' Dreams? A Summary Based on Personal Narratives

The content of dreams can be attributed to the brain's processing and integration of information collected during the day, which is the basis of the continuity hypothesis proposed by American psychologist Calvin Hall. When we sleep, our brains typically extract fragments from previous memories, experiences, and emotions, piecing them together into a narrative. These complex neural interactions generate the vivid, sometimes perplexing scenes and characters we encounter in our dreams.

The human brain functions like a director specializing in montage, taking seemingly unrelated events, people, and objects from daily experiences and combining them in a way only the subconscious can understand. In dreams, the laws of physics and logic are distorted, creating surreal imagery and stream-of-consciousness narratives that often leave us pondering their deeper meanings upon waking.

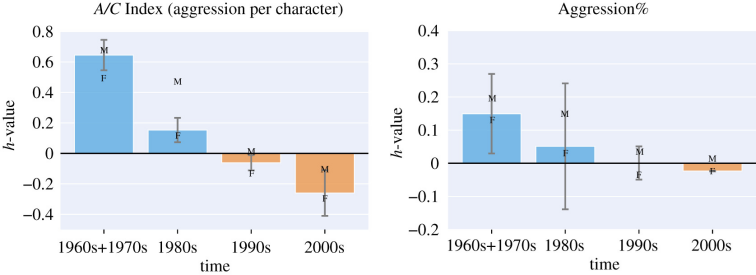

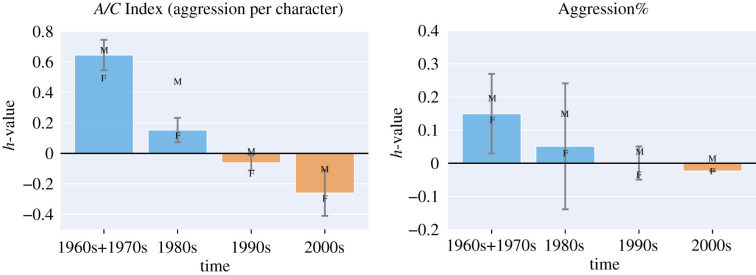

However, scientific research is not about collecting curious anecdotes. Regardless of the discipline, the first step in research is establishing a baseline: understanding what is typical for most people provides an anchor point for interpreting individual cases. A 2020 study published in Royal Society Open Science used natural language processing to analyze 24,000 self-reported dreams from the Dream Bank database, contributed by subjects of different ages and genders [1]. The study found that various factors, such as age, gender, and past experiences (e.g., military service), influenced the subjects' dreams (Figure 2). Although the study was based on data collected in the United States and its conclusions may not apply globally due to cultural differences, its research methodology is worth referencing.

▷ Figure 2. The level of aggression in dream reports across different ages has decreased from the 1960s to now, a trend consistent with US violent crime statistics. Source: Reference [1]

Beyond statistical group descriptions, such studies allow people to compare the types of dreams experienced by others in similar situations. For example, in Dreamcatcher, individuals can view each leaf in the diagram below (each click reveals a dream description), alleviating anxiety caused by dreams by understanding the dreams of people in similar circumstances. Users can also upload their dream descriptions to enrich the database.

▷ Figure 3. A dream interpretation and summary website based on natural language processing: https://social-dynamics.net/dreams/

Follow-up studies based on the Dream Bank database [2] include an analysis of the perplexity of dream narratives using GPT models. Perplexity is a statistical measure of how unexpected a text is to a language model. Using Wikipedia text as a baseline, the research indicated that dream narratives are not as unpredictable as previously thought: their perplexity is similar to that of the baseline. Another finding, in line with common intuition, is that women's dream descriptions have higher perplexity and greater variability, meaning men's dream narratives are easier to predict than women's.

▷ Figure 4. Comparison of perplexity in dream narratives between men and women. Source: Reference [2]
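To make the perplexity measure concrete, here is a minimal sketch. It is not the pipeline used in [2]; it assumes we already have the probability a language model assigned to each token of a narrative. The function name `perplexity` and the sample probabilities are made up for illustration.

```python
import math

def perplexity(token_probs):
    """Perplexity = exp of the average negative log-probability per token."""
    if not token_probs:
        raise ValueError("need at least one token probability")
    avg_neg_log = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(avg_neg_log)

# Hypothetical per-token probabilities (illustrative values, not real data).
predictable_text = [0.5, 0.5, 0.5, 0.5]    # the model finds each word likely
surprising_text = [0.1, 0.05, 0.2, 0.1]    # the model is often surprised

print(perplexity(predictable_text))
print(perplexity(surprising_text))
```

A higher value means the model found the text harder to predict, which is the sense in which one group's dream narratives can be called "less predictable" than another's.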

2. How Do Scientists Read Minds?

Unlike research relying on subjective self-reports, dream visualization attempts to convert the brain's cognitive activities during subconscious or unconscious states into explicit images or labels, such as what is seen, heard, or felt during dreams. This research typically involves waking subjects during sleep and asking them to recall what they were dreaming. Among these topics, lucid dreaming is particularly popular. A "lucid dream" can be considered a superposition of dreaming and waking states, where the dreamer is aware of dreaming and can describe their dream.

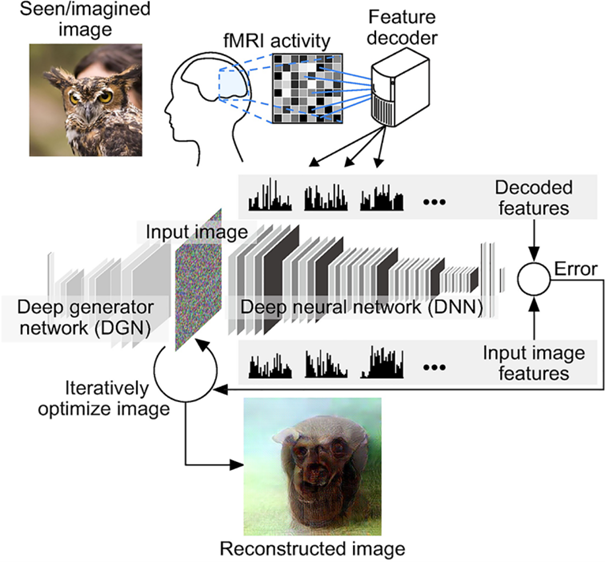

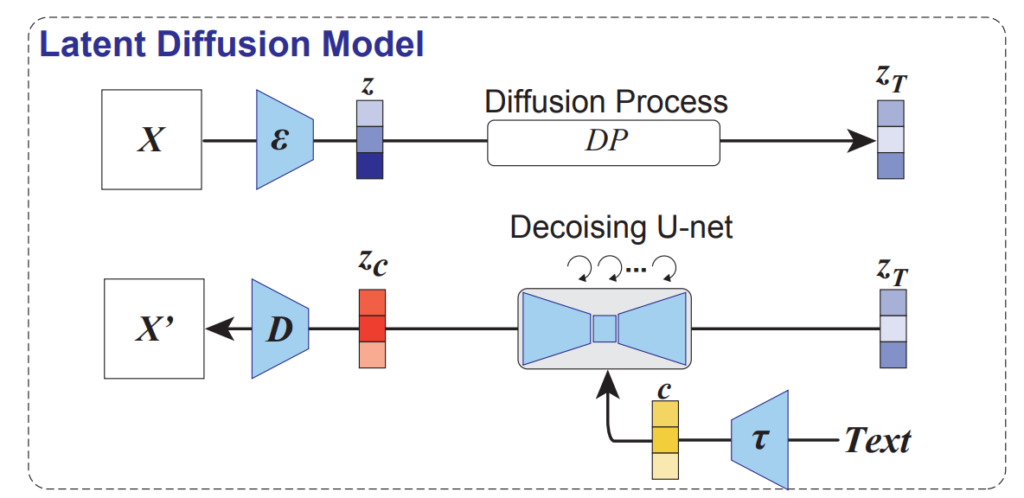

The foundation of dream visualization requires proving that changes in brain patterns, measured by methods such as magnetic resonance imaging (MRI), near-infrared spectroscopy, and electroencephalography (EEG), can predict thoughts. A 2019 study [3] reconstructed images observed by subjects based on brain activity. In 2023, another study [4], using Stable Diffusion, could more accurately reproduce the images seen in the subjects' minds.

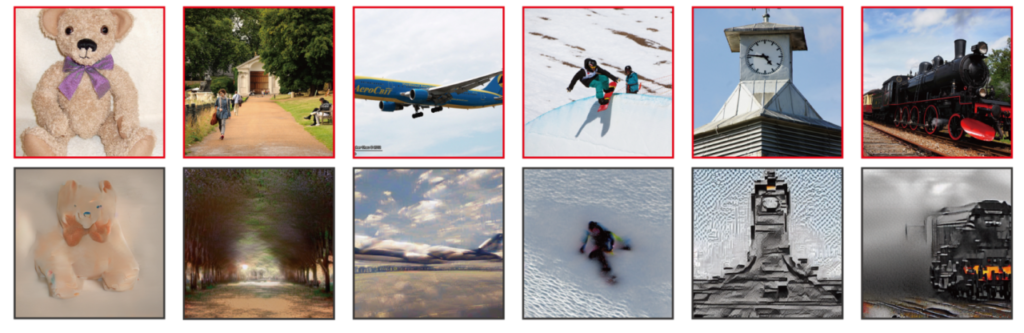

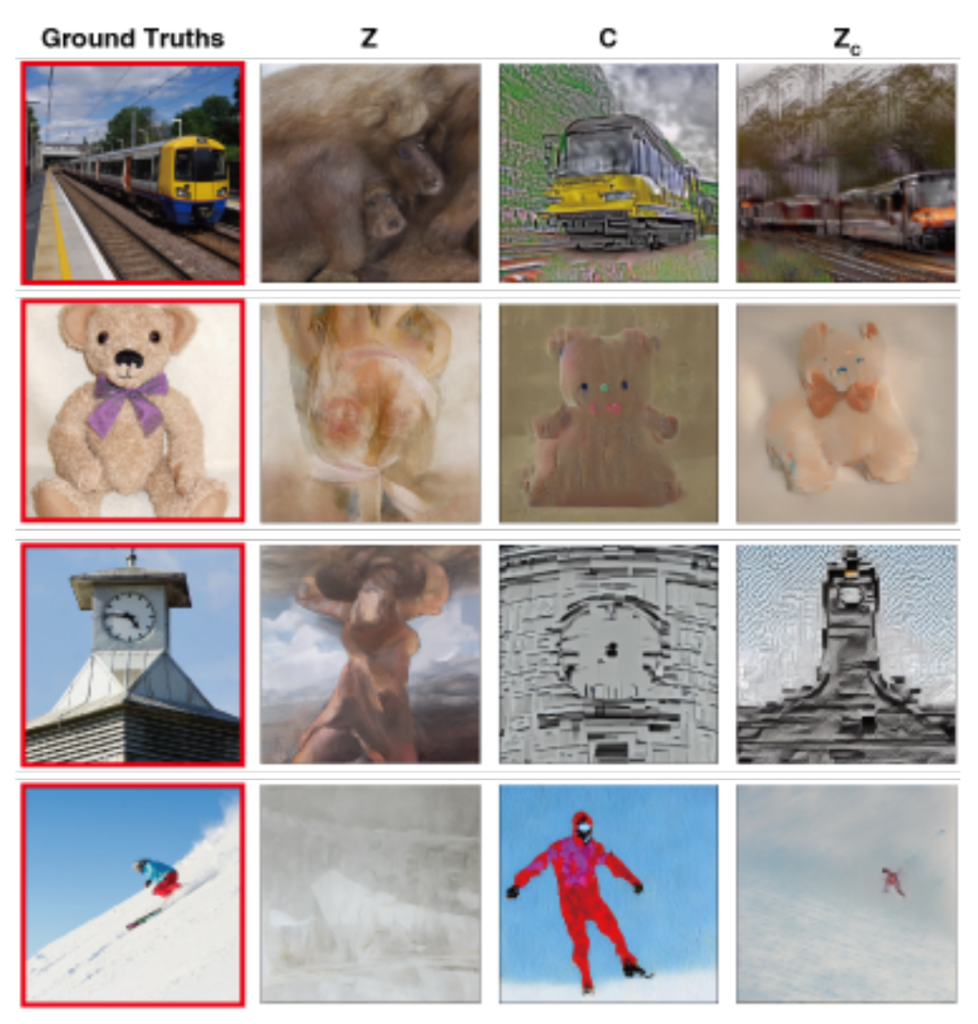

Comparing Figures 5 and 6, the reconstructed images in the 2023 study are significantly more accurate. What innovations have contributed to this performance improvement?

▷ Figure 5. Images observed by subjects reconstructed using deep neural networks based on EEG and MRI recordings. Source: Reference [3]

▷ Figure 6. Comparison between real images seen by subjects (first row) and the reconstructed images by the model. Source: Reference [4]

The most noticeable difference between the 2019 and 2023 studies is the addition of semantic decoding in the latter. Before discussing this, let's first look at how image decoding was done in the 2023 study. When processing images, the brain behaves somewhat like lossy compression: it reduces an image to a basic sketch that retains the essential information while simplifying the form for easier handling. This compressed information is stored in a distributed manner in the brain, offering scientists a way to decode it. Using fMRI technology, scientists can record which parts of the brain are active, similar to observing which hard drives are active in a supercomputer with tens of thousands of drives when processing specific data, thus establishing a basic model.

In practical brain decoding, researchers first construct a preliminary sketch based on the compressed and distributed information from fMRI data. Then, during the diffusion process, the model gradually renders and colors the sketch, enriching the image. However, as in painting, having a clear target is essential. Reconstructing the imagined image in the brain requires utilizing the semantic information the brain generates after viewing an image.

▷ Figure 7. Model framework diagram from the study. Source: Reference [4]

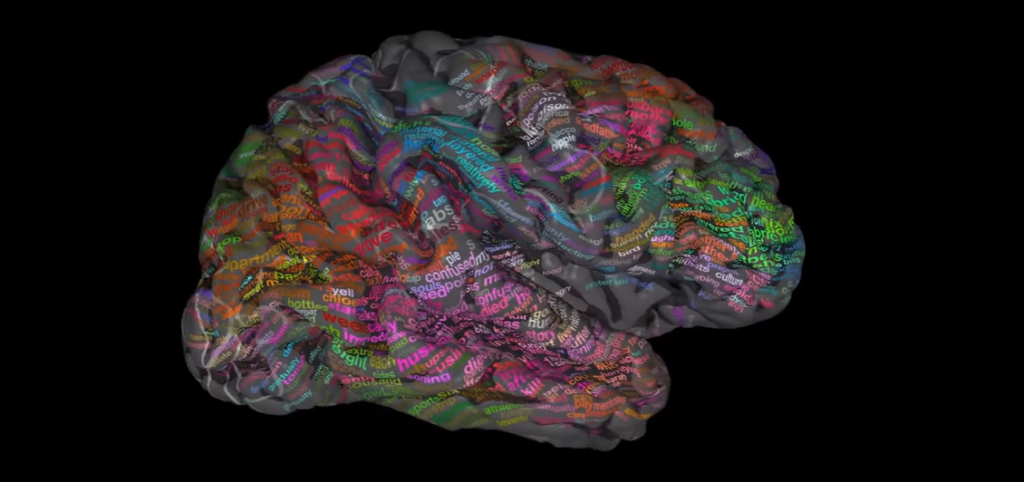

According to the brain's "semantic map" [5], individual words evoke specific fMRI responses, and these responses are distributed across the brain rather than confined to a single language processing area. Words with related meanings are located closer together in the brain. The study [4] processed semantic and visual signals using different neural networks, using decoded semantic information to help reconstruct the image.

For example, if a subject sees a picture of a dog, an image reconstructed solely from visual signals might lack typical dog features and look more like a cat. With the help of semantic information, the decoding system identifies related words such as "bone" and emphasizes dog features during the latent diffusion process, enhancing the sketch. This combined method of image reconstruction, using both semantic and visual information, achieves clearer and more accurate image restoration than relying on either alone.

▷ Figure 8. A brain semantic map consisting of 985 words. Source: Reference [5]

▷ Figure 9. Comparison of original images seen by subjects and reconstructed images based on only visual features (second column), only semantic features (third column), and both (fourth column). Source: Reference [4]

In recent years, scientists have also used similar approaches to generate sound signals subjects heard based on brainwave data [6], enabling speech-impaired individuals to express their thoughts. Additionally, current research can decode text silently read by subjects [7] and reconstruct scenes from short videos watched by them [8]. These studies demonstrate researchers' ability to read minds based on brain activity.

However, there is still a long way to go in interpreting dreams. First, dreams are often assumed to be phantasmagorical and erratic, more illogical and full of strange surprises than waking thought, although the earlier study on dream narrative perplexity [2] already provided evidence to the contrary. Second, many dreams involve strong emotions, raising concerns that intense dream emotions might interfere with interpretation.

A 2024 study [9] reported combining personal narratives and fMRI to develop a model predicting the emotional content of spontaneous thoughts, determining whether the subjective experience in lucid dreams is positive or negative. Across 199 subjects, the model predicted self-relatedness and emotional experience while subjects read stories, during spontaneous thought (lucid dreaming), and at rest.

In this study, researchers monitored subjects' brain activity while they read stories in order to decode the emotional dimensions of thoughts. To capture various thought patterns, subjects took part in one-on-one interviews used to create personalized narrative stimuli reflecting their past experiences and emotions. As subjects read their stories, their brain activity was recorded in an MRI scanner. After the fMRI scan, subjects reread the stories, reporting perceived self-relatedness (how much the content related to them) and emotion (positive or negative) at each moment. Using quintiles of each subject's self-relatedness and emotion scores, the research team created 25 combinations of emotion and self-relatedness levels (5 × 5), then used machine learning to combine these labels with fMRI data from 49 subjects to decode the emotional dimensions of thoughts in real time.
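The quintile step can be sketched in a few lines. This is only an illustration of the binning idea under simple assumptions, not the procedure from [9]: the function names and the ten sample ratings are hypothetical, and the cut points come from Python's standard `statistics.quantiles`.

```python
import bisect
import statistics

def quintile_index(value, cut_points):
    """Return 0..4: which fifth of the distribution `value` falls into."""
    return bisect.bisect_right(cut_points, value)

def label_moments(self_relatedness, emotion):
    """Map each moment to one of 25 (self-relatedness, emotion) cells."""
    sr_cuts = statistics.quantiles(self_relatedness, n=5)  # 4 cut points
    em_cuts = statistics.quantiles(emotion, n=5)
    return [
        (quintile_index(s, sr_cuts), quintile_index(e, em_cuts))
        for s, e in zip(self_relatedness, emotion)
    ]

# Made-up ratings for ten moments of one subject's story.
self_rel = [0.1, 0.4, 0.35, 0.8, 0.9, 0.2, 0.55, 0.6, 0.75, 0.05]
emo = [-0.9, -0.2, 0.1, 0.7, 0.9, -0.5, 0.0, 0.3, 0.6, -0.7]

cells = label_moments(self_rel, emo)
print(cells)
```

Each moment ends up in one cell of a 5 × 5 grid, and brain activity recorded at moments sharing a cell can then be grouped before a decoder is trained.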

The study found that key brain regions such as the anterior cingulate cortex and anterior insula were crucial in predicting personal relevance and emotional tone. By decoding emotions unrelated to specific narratives, the study helps us understand the internal states and contexts influencing subjective experiences, potentially revealing individual differences in thought and emotion and aiding in mental health assessment.

3. The Reality and Ideal of Dream Visualization

The aforementioned studies have not directly analyzed the brain during dreaming but have instead attempted to predict what subjects think and see in a waking state (or lucid dream). However, some research has started to explore brain activity during dreams directly and attempt to visualize the images in dreams.

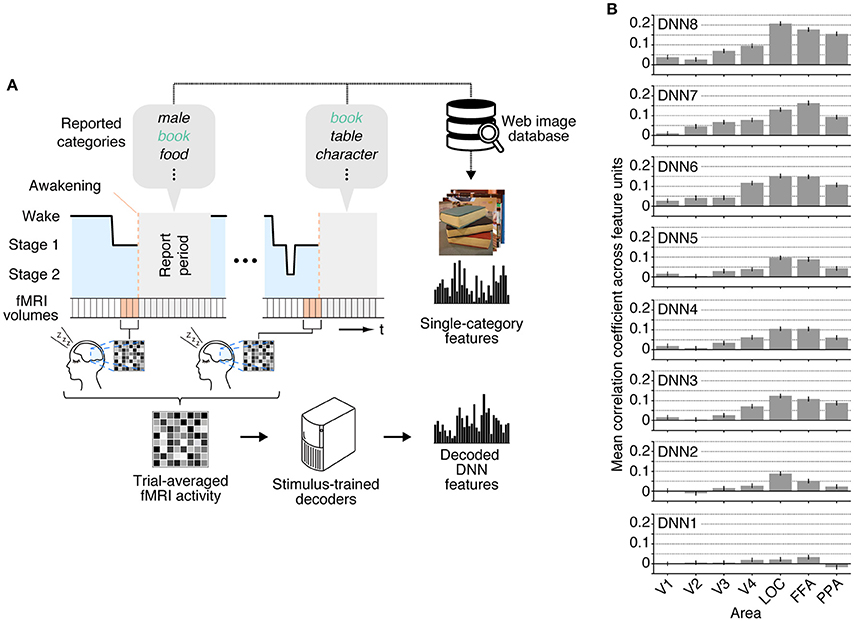

For example, a study [10] based on the brain activity data of two subjects under fMRI and their self-reported dream content revealed that image processing in the brain during dreams is hierarchical. This means that dreaming activates layered visual feature representations related to the dreamed objects. The study showed that feature values decoded from brain activity during dreaming correlated with those related to object categories in the mid-to-high levels of deep neural networks. This not only improved the accuracy of distinguishing object categories in dreams but also suggested that the visual feature representation in dreams is similar to visual processing during wakefulness. This indicates that the brain may use the same mechanisms when dreaming and awake, supporting the generalizability of decoding methods across different visual experiences (wakefulness and dreaming) and demonstrating the feasibility of dream interpretation.

▷ Figure 10. In a single experiment, dream images can be predicted based on higher-level abstract features. Source: Reference [10]
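One simple way to turn decoded feature values into a guess about the dreamed object is to correlate them with a typical feature vector for each candidate category and pick the best match. The sketch below illustrates that idea only; it is not the code from [10], and the four-dimensional feature vectors and category names are invented for the example.

```python
import math

def pearson(x, y):
    """Pearson correlation of two equal-length vectors (non-constant inputs)."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)

def identify_category(decoded, category_features):
    """Return the category whose feature vector correlates best with `decoded`."""
    return max(
        category_features,
        key=lambda name: pearson(decoded, category_features[name]),
    )

# Hypothetical network-layer features averaged over images of each category.
category_features = {
    "person": [0.9, 0.1, 0.8, 0.2],
    "chair": [0.1, 0.9, 0.2, 0.7],
}
# Hypothetical features decoded from brain activity just before awakening.
decoded = [0.7, 0.3, 0.6, 0.4]

print(identify_category(decoded, category_features))
```

In a real experiment the feature vectors would have thousands of dimensions, and the guess would be scored against what the subject reported after waking.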


As early as 2013, a study [11] trained a decoding model on brain activity induced by stimuli in the visual cortex, showing that the model's ability to classify dream content exceeded random guessing. In this study, three subjects underwent MRI sleep experiments, where they were awakened whenever their recorded brain activity indicated that they had fallen asleep, and then described their visual experiences before waking. To collect sufficient data, each subject was awakened every 5-6 minutes on average, focusing on hypnagogic visual experiences. In over 75% of awakenings, subjects reported dream content. The predictive model attempted to distinguish objects seen in dreams, such as people or chairs, and used multiple labels in the decoder output to improve accuracy. This is still far from decoding thoughts in dreams as discussed above.
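What "exceeding random guessing" means can be made precise with a one-sided binomial test: if the decoder were guessing, how likely would it be to get at least this many answers right? The sketch below uses made-up trial counts, not the results of [11], and the function name `binomial_p_value` is hypothetical.

```python
import math

def binomial_p_value(n_trials, n_correct, chance):
    """P(at least n_correct hits in n_trials) if the decoder were guessing."""
    return sum(
        math.comb(n_trials, k) * chance**k * (1 - chance) ** (n_trials - k)
        for k in range(n_correct, n_trials + 1)
    )

# Made-up numbers: 200 two-way decisions, 124 correct, chance level 50%.
n_trials, n_correct = 200, 124
p = binomial_p_value(n_trials, n_correct, 0.5)
print(f"accuracy = {n_correct / n_trials:.2f}, p = {p:.4g}")
```

A small p-value says only that the decoder is doing better than coin flipping; an accuracy of 62% in this toy example would still leave many dream reports misclassified.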

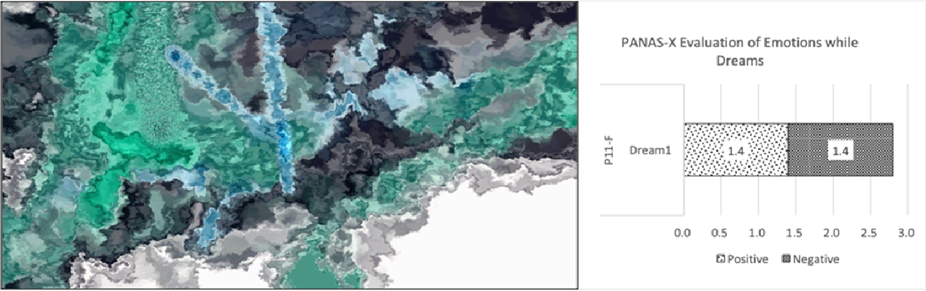

In 2021, a study [12] from Tsinghua University's Future Laboratory, based on EEG data of 11 subjects during REM sleep and combined with psychological questionnaires to assess emotions, generated abstract visual representations of dreams. Figure 11 shows an artistic representation of a dream based on EEG data, where the emotional keyword was "relieved." This study further illustrates that current dream interpretation cannot achieve pixel-level accuracy; it can only provide qualitative emotional descriptions or make better-than-chance guesses about objects in dreams.

▷ Figure 11. An artistic representation of the emotional content of a dream based on EEG data. Source: Reference [12]


However, the studies on brain decoding during wakefulness, together with the research showing that dreaming and waking perception share neural mechanisms, indicate the technical feasibility of dream interpretation. Currently, limitations such as fMRI machine noise, experimental costs, and the small number of subjects constrain the maturity of these studies. Nevertheless, with future technological advancements, this field holds tremendous potential. Dream visualization technology has significant implications for mental health. Understanding dreams could lead to new treatments, especially for conditions such as PTSD, depression, and anxiety.

Beyond psychological interventions, recreating dreams could be used for creative generation. Using creative design to reconstruct dream content can produce more artistically innovative work. Historically, Kekulé is said to have discovered the structure of the benzene ring in a dream, and Mendeleev to have conceptualized the periodic table in a dream. We do not know how many scientific ideas have appeared and silently disappeared in dreams. By recording and interpreting dreams, we might be able to recover more such scientifically innovative dreams.

In summary, EEG and fMRI have transformed dream analysis from mere speculation into empirical scientific exploration, bringing us closer to uncovering the truth about dreams.

4. Commercial Applications of Dream Analysis and Guidance

When it comes to dream interpretation, people are most familiar with the psychoanalytic theories of Freud and Jung. Although these theories are widely known, the academic community disputes their effectiveness. With the maturation of large language models and corresponding intelligent agents, several chatbots claiming to interpret dreams have emerged. However, my personal experience with these bots has been underwhelming. Some applications even claim to combine traditional Chinese medicine with dream interpretation. Readers should critically examine such applications, as they might border on pseudoscience and should not be trusted just because they use large models.

In addition to conversing about dream content, combining AI with dreams also has potential in dream recording applications. Considering that our recollection of dreams often occurs when we are just waking up or half-awake, and a significant portion of dreams vanish after we wake up, a recording tool becomes especially important. The Apple platform’s PlotPilot [13] can record users' verbal descriptions of their dreams and use AI text analysis to add corresponding background music, creating a personalized audiobook. It can now even generate videos based on dream descriptions. Such tools not only help users better understand the psychological needs of their subconscious but also promote the study of dreams.

If predicting personal thoughts based on brainwave activity brings to mind scenes from many science fiction novels, tools that help users enter and stabilize lucid dreams evoke the feel of "Inception." In January 2024, the American startup "Prophetic" developed a new AI model called "Morpheus-1." This model takes brainwave activity as its prompt and, built on a multimodal large model, generates shaped sound waves intended to interact with the current brain state. The output sound waves can be paired with a new headband product, "The Halo," which Prophetic plans to release next spring. The Halo will send sound waves into the brain, connecting with the current brain state, thus bringing the mind into a lucid state. According to Prophetic, “Guided lucid dreams are dreams where the dreamer is aware that they are dreaming.” The company envisions its product allowing users to effectively control their dreams.

As the company was only recently founded and the promoted product has not yet been released, the actual capability of its technology remains to be seen. If successful, this development would have significant implications for both academia and the market. Guided lucid dreams could help reduce nightmares induced by psychological conditions like PTSD, promote mindfulness, and open new windows into the mysterious nature of consciousness.

On the other hand, whether guiding or predicting dreams, applications involving personal experiences will undoubtedly generate a vast amount of sensitive data. How can we prevent such technology from being misused? Consumers need to be aware of the double-edged nature of technology and be conscious of protecting their rights and privacy. Before the technology matures, it might be helpful to conduct thought experiments through science fiction, simulating the possible societal impacts of new technological products in various scenarios: for instance, what if future workers had to use lucid dreams to prove their loyalty to the company, or suspicious partners secretly recorded and analyzed their significant other's dreams to find evidence of infidelity? What kind of society would that create?

5. Conclusion

"Life is but a dream…"

From the psychology of Freud and Jung to the latest EEG-based mind-reading techniques and the text analysis of vast dream narratives using large models, our understanding of the brain is transitioning from qualitative to quantitative, from individual cases to group statistics, and from relying on subjective descriptions to objective data. As we gain a better understanding of the mechanisms behind dream generation, it becomes possible to gradually control the occurrence of dreams, thereby preventing or reducing nightmares and even guiding the creation of lucid dreams.

With advancements in the measurement and control of dreams, researchers can scientifically attempt to answer previously unanswerable questions, such as: What are the emotional experiences within dreams? What scenes do animals think of when they dream? Do AIs have the capacity to dream? Knowledge brings forth new technological applications, and new technologies expand the boundaries of accessible knowledge. Once dream research moves beyond pseudoscience and amateur theories, it will embark on the virtuous cycle common to the scientific community.